As compute demands evolve across industries, from startups training foundation models to biotech teams running genomics pipelines, choosing the right hardware isn't just a technical decision; it's a strategic one.

At Vantage, we believe cloud HPC should be powerful, transparent, and tailored. This guide highlights the core hardware options currently available on the Vantage platform and maps them to real-world use cases to help you choose the best fit for your workload.

Here's an overview of the hardware commonly used for different workloads.

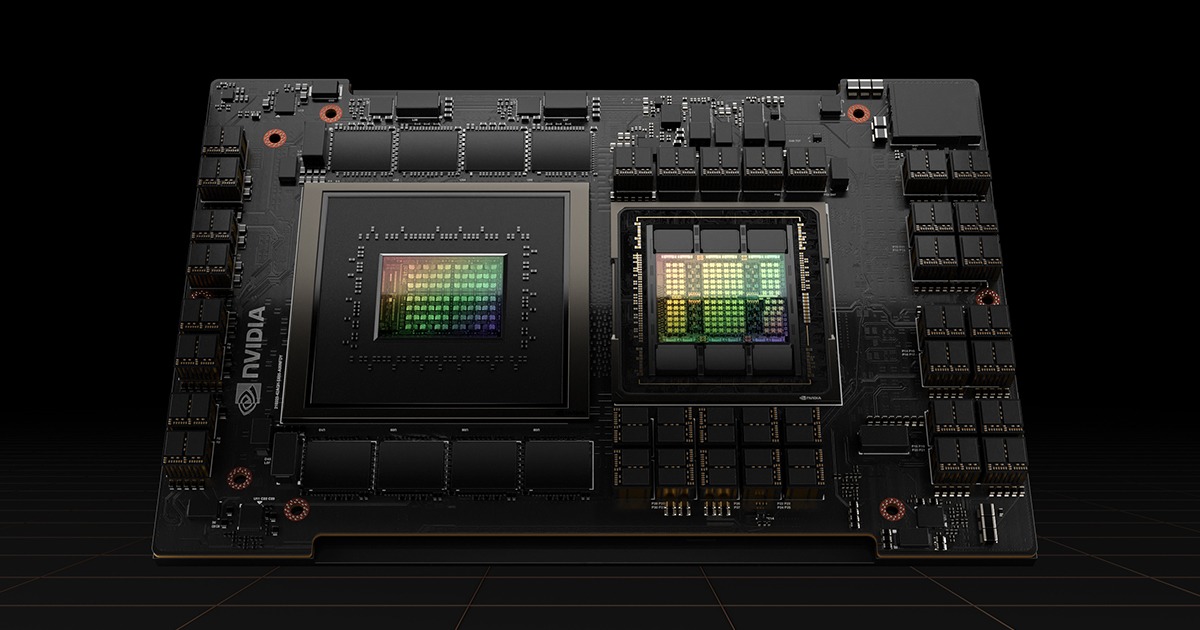

NVIDIA H100

Best for: Foundation model training, dense LLM/transformer workloads, AI infrastructure

Ideal for: Frontier AI startups, ML researchers, enterprise-scale model development.

AMD Genoa CPUs

Best for: High-performance CPU workloads such as simulations, genomics, rendering, and data preparation

Ideal for: Research labs, bioinformatics, simulation-intensive applications.

NVIDIA A100

Best for: Inference, training mid-size models, analytics

Ideal for: Fintech, applied AI, NLP inference, early-stage training pipelines.

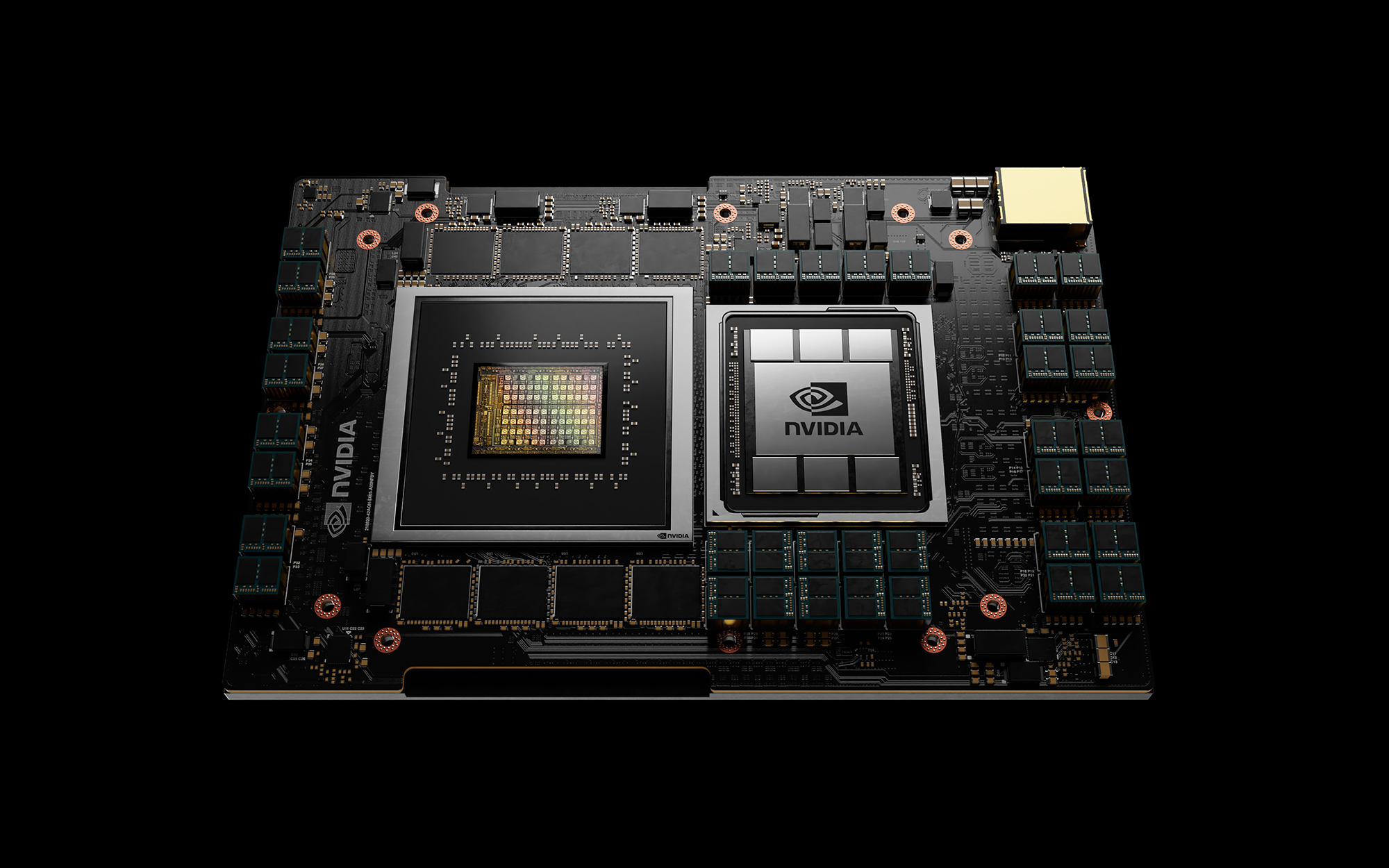

NVIDIA Grace Hopper Superchip (GH200)

Best for: Large-scale AI/HPC workloads, accelerated compute, hybrid training/inference workloads

Ideal for: Advanced AI research, large-scale HPC deployments, integrated training and inference.

ARM-based CPUs

Best for: Power-efficient, scalable workloads, cloud-native and edge computing

Ideal for: Cloud-native applications, edge deployments, efficient compute clusters.

PCIe Gen5 NVMe storage

Best for: Fast checkpointing, large intermediate files, IOPS-intensive workflows

400G InfiniBand and RoCEv2 networking

Best for: Low-latency, high-throughput multi-node workloads (MPI, CFD, etc.)
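To make the "Best for" guidance above concrete, here is a minimal Python sketch that encodes it as a small rule set. The `Workload` fields and the `recommend` function are hypothetical illustrations, not a Vantage API, and the rules simplify the guidance rather than replace real benchmarking or sizing.

```python
from dataclasses import dataclass


# Hypothetical workload descriptor; field names are illustrative only.
@dataclass
class Workload:
    gpu_bound: bool = False            # dominated by GPU math (training or inference)
    frontier_scale: bool = False       # foundation-model-scale training
    hybrid_train_infer: bool = False   # training and inference on the same nodes
    power_constrained: bool = False    # edge or power/thermal-limited deployment
    multi_node_mpi: bool = False       # tightly coupled multi-node jobs (MPI, CFD)
    heavy_checkpointing: bool = False  # frequent checkpoints or large intermediates


def recommend(w: Workload) -> list[str]:
    """Map workload traits to the hardware categories described in this guide."""
    picks = []
    # Compute: pick one primary processor family.
    if w.gpu_bound:
        if w.hybrid_train_infer:
            picks.append("NVIDIA GH200")
        elif w.frontier_scale:
            picks.append("NVIDIA H100")
        else:
            picks.append("NVIDIA A100")
    elif w.power_constrained:
        picks.append("ARM-based CPUs")
    else:
        picks.append("AMD Genoa CPUs")
    # Storage and networking are add-ons driven by I/O and scale-out needs.
    if w.heavy_checkpointing:
        picks.append("PCIe Gen5 NVMe storage")
    if w.multi_node_mpi:
        picks.append("400G InfiniBand / RoCEv2")
    return picks


if __name__ == "__main__":
    # A CPU-bound, tightly coupled CFD job.
    print(recommend(Workload(multi_node_mpi=True)))
```

For the CFD example this returns `['AMD Genoa CPUs', '400G InfiniBand / RoCEv2']`, matching the aerospace use case described below. Keeping compute as a single choice and storage/networking as independent add-ons mirrors how the guide treats them as separate layers.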

Using H100 GPUs to train reinforcement learning (RL) models within synthetic simulation environments, enabling faster training cycles and more robust robotic control algorithms.

Leveraging AMD Genoa CPUs to perform genome alignments and bioinformatics analyses, achieving 40% faster results compared to traditional cloud solutions.

Deploying NVIDIA A100 GPUs to serve transformer-based NLP models for real-time inference, consistently achieving latency below 20 milliseconds for financial services.
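A latency target such as "below 20 milliseconds" is often judged against a tail percentile rather than the average. The sketch below assumes a p99 target (the use case does not say which percentile): it times repeated calls and checks the 99th percentile against a 20 ms budget. `fake_model` is a hypothetical stand-in for a real inference call; actual numbers depend entirely on your model, batch size, and hardware.

```python
import statistics
import time


def measure_latency_ms(fn, payload, runs=200):
    """Call fn(payload) repeatedly and return per-call latencies in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(payload)
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def meets_slo(samples_ms, budget_ms=20.0, percentile=99):
    """Return True if the given latency percentile is under the budget."""
    # quantiles(n=100) returns the 99 cut points p1..p99; "inclusive" keeps
    # estimates within the observed range of the samples.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return cuts[percentile - 1] < budget_ms


def fake_model(text):
    # Hypothetical stand-in for a transformer inference call.
    return len(text.split())


if __name__ == "__main__":
    lat = measure_latency_ms(fake_model, "quarterly revenue beat expectations")
    p99 = statistics.quantiles(lat, n=100, method="inclusive")[98]
    print(f"p99 = {p99:.4f} ms, meets 20 ms budget: {meets_slo(lat)}")
```

In practice you would time the full request path (tokenization, network hop, model forward pass) rather than an in-process function.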

Employing the NVIDIA Grace Hopper Superchip (GH200) for large-scale autonomous vehicle simulation, AI model training, and real-time inference workloads, significantly speeding up AI-driven vehicle development and validation.

Accelerating aerodynamic modeling and computational fluid dynamics (CFD) using AMD Genoa CPUs and 400G InfiniBand networking, dramatically reducing simulation run times and enabling faster iteration in aircraft design.

Utilizing ARM-based CPUs for secure, power-efficient edge computing in distributed surveillance and intelligence-gathering applications, improving thermal efficiency and extending operational longevity in challenging environments.

Choosing the right infrastructure involves aligning compute, storage, and networking to your specific workload. Proper alignment reduces waste, enhances performance, and accelerates outcomes:

Compute

Determines throughput and cost-efficiency across workloads ranging from frontier AI models (H100, GH200) to CPU-heavy genomics (AMD Genoa) and efficient edge deployments (ARM).

Storage

The latest PCIe Gen5 NVMe storage enables rapid checkpointing and high-speed data handling, which is crucial for intensive AI workloads and real-time inference.
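Fast local storage pays off most when checkpoints are written safely as well as quickly. Here is a minimal sketch, assuming a local NVMe scratch directory (the path in the usage note is hypothetical) and using Python's `pickle` in place of a framework-specific format such as PyTorch's `torch.save`. It writes to a temporary file in the same directory, `fsync`s it, then uses `os.replace` to move it into place, so a crash mid-write does not leave a truncated checkpoint at the target path.

```python
import os
import pickle
import tempfile


def save_checkpoint(state, path):
    """Write a checkpoint atomically: temp file in the same dir, fsync, then rename."""
    directory = os.path.dirname(os.path.abspath(path))
    os.makedirs(directory, exist_ok=True)
    # Create the temp file next to the target so the rename stays on one filesystem.
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "wb") as f:
            pickle.dump(state, f, protocol=pickle.HIGHEST_PROTOCOL)
            f.flush()
            os.fsync(f.fileno())  # make sure bytes reach the device before renaming
        os.replace(tmp_path, path)  # atomic on POSIX within a single filesystem
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


def load_checkpoint(path):
    """Load a checkpoint written by save_checkpoint."""
    with open(path, "rb") as f:
        return pickle.load(f)
```

For example, `save_checkpoint({"step": 1000}, "/scratch/nvme/ckpt/step_1000.pkl")`. Only load pickles you wrote yourself, since unpickling untrusted data can execute arbitrary code.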

Networking

400G InfiniBand and RoCEv2 deliver low latency and high bandwidth, making them well suited to MPI-based HPC workloads and distributed simulations.
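A back-of-the-envelope estimate shows why link speed matters for multi-node jobs. The common latency-bandwidth (alpha-beta) model estimates message time as T = α + n/β, where α is per-message latency, n is message size, and β is bandwidth. The sketch below applies it; `alpha_s` is an illustrative parameter, not a measured value for any Vantage network, and real transfers also lose some throughput to protocol overhead.

```python
def transfer_seconds(num_bytes: float, link_gbps: float = 400.0, alpha_s: float = 0.0) -> float:
    """Estimate message time with the alpha-beta model: T = alpha + n / beta.

    num_bytes: message size in bytes (n)
    link_gbps: link bandwidth in gigabits per second (beta before unit conversion)
    alpha_s:   per-message latency in seconds (alpha); 0 keeps only the bandwidth term
    """
    beta_bytes_per_s = link_gbps * 1e9 / 8  # convert Gb/s to bytes per second
    return alpha_s + num_bytes / beta_bytes_per_s


if __name__ == "__main__":
    # 10 GB (10e9 bytes), e.g. a large gradient exchange or checkpoint shard.
    for gbps in (100.0, 400.0):
        print(f"{gbps:.0f} Gb/s: {transfer_seconds(10e9, gbps):.2f} s")
```

Ignoring latency and overhead, moving 10 GB takes about 0.80 s at 100 Gb/s versus 0.20 s at 400 Gb/s. For the many small messages typical of MPI codes, the α term dominates instead, which is why low latency matters as much as raw bandwidth.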

Smart hardware choices shape outcomes, reducing time-to-value and overhead for HPC and AI/ML workloads.